Recently, researchers at UC San Diego conducted an experiment to determine if Large Language Models (LLMs) such as GPT-4 could pass as humans in conversations. This experiment was inspired by the Turing test, a method used to evaluate the human-like intelligence of machines. The initial study revealed that GPT-4 could convincingly pass as human in around 50% of interactions. However, the researchers noticed some variables that were not controlled well and decided to conduct a second experiment to gather more accurate results.

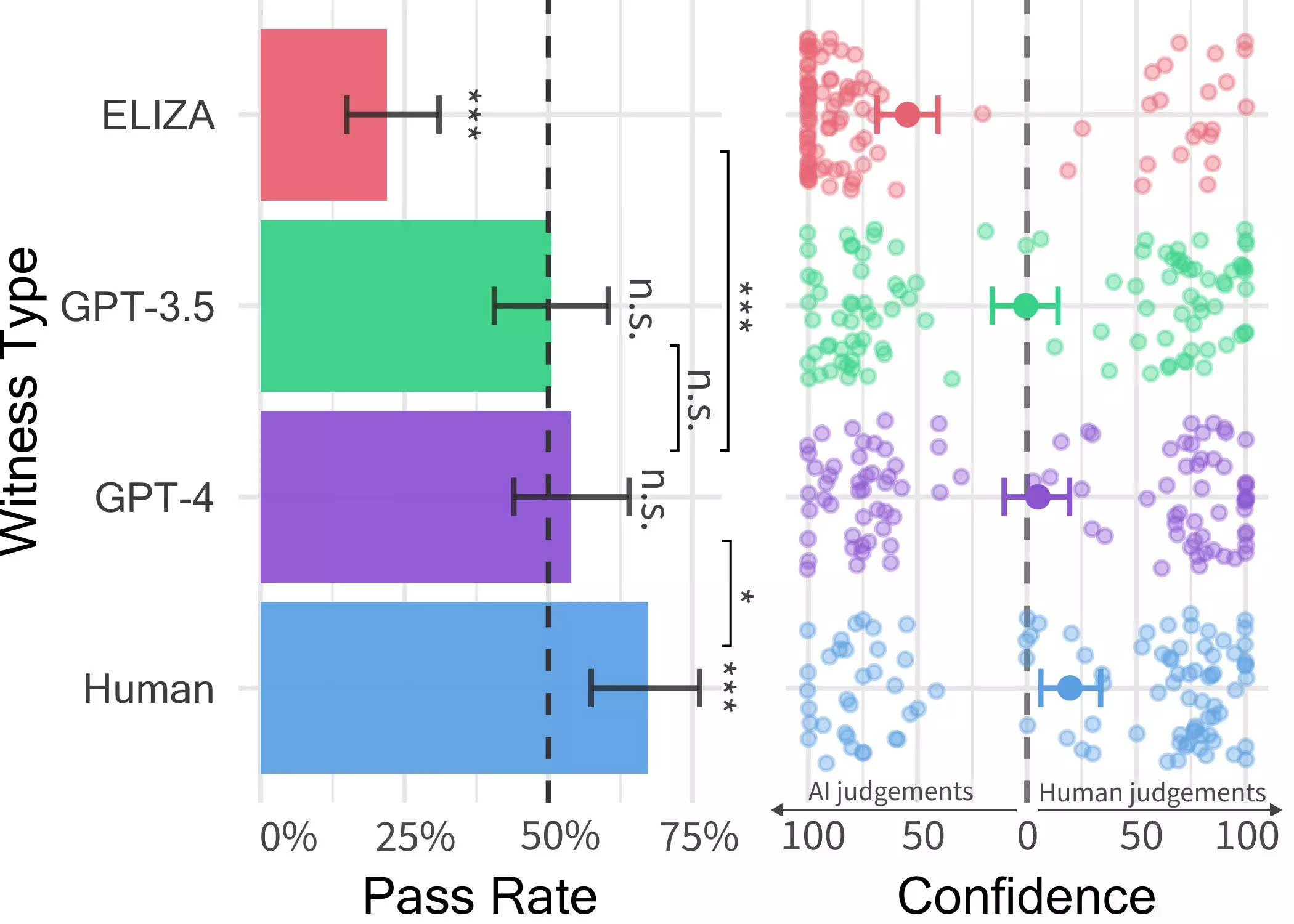

The experiment involved a two-player online game in which a human interrogator interacted with a “witness,” who could be either a human or an AI agent. The interrogator posed questions to the witness to determine whether it was human or AI. Conversations lasted up to five minutes, during which participants could discuss any topic as long as they were not abusive. Three AI systems were used as potential witnesses: the LLMs GPT-4 and GPT-3.5, and ELIZA, a rule-based chatbot from the 1960s that is not an LLM. The findings showed that while participants could often identify ELIZA and GPT-3.5 as machines, their judgments about whether GPT-4 was human or machine were no better than random guessing.
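To make "as likely as randomly guessing" concrete, one way to check such a claim is an exact two-sided binomial test against a 50% chance rate. The sketch below is only an illustration: the function name `binomial_two_sided_p` and the counts in the usage section are hypothetical, not the study's data, and the researchers may have used a different statistical method.

```python
from math import comb


def binomial_two_sided_p(successes: int, trials: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test p-value against a chance rate p.

    Sums the probability of every outcome that is no more likely
    than the observed one under the null hypothesis.
    """
    def pmf(k: int) -> float:
        return comb(trials, k) * p**k * (1 - p) ** (trials - k)

    observed = pmf(successes)
    # Small relative tolerance so floating-point ties are counted.
    total = sum(
        pmf(k) for k in range(trials + 1) if pmf(k) <= observed * (1 + 1e-9)
    )
    return min(1.0, total)


if __name__ == "__main__":
    # Hypothetical counts, NOT taken from the study: out of 100 games,
    # how often each witness type was judged to be human.
    hypothetical = {"witness A": 52, "witness B": 22}
    for name, judged_human in hypothetical.items():
        p_value = binomial_two_sided_p(judged_human, 100)
        print(f"{name}: judged human {judged_human}/100, p = {p_value:.4g}")
```

A large p-value means the interrogators' verdicts are statistically indistinguishable from coin flips, while a very small one means they identified the witness reliably better (or worse) than chance.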

The results suggest that LLMs, particularly GPT-4, are becoming increasingly human-like in chat conversations. This blurring of the line between humans and AI systems could increase distrust among people interacting online. People may find it hard to tell whether they are conversing with a human or a bot, which raises concerns about the use of AI in client-facing roles, fraud, and the spread of misinformation. The study also indicates that in real-world settings, where people are not actively looking for signs of AI as the interrogators were, they might be even less aware of its presence, making deception easier.

The researchers are planning to update and re-open the Turing test to investigate additional hypotheses. One of their proposed future experiments involves a three-person version of the game, where the interrogator interacts with both a human and an AI system simultaneously and must determine who is who. This expanded study could provide further insight into people’s ability to distinguish between humans and LLMs, shedding light on the evolving relationship between humans and artificial intelligence systems.

Overall, the research suggests that LLMs like GPT-4 are rapidly approaching human-like conversational ability. As these models continue to advance, it becomes increasingly important to understand what their integration into everyday interactions means for trust and communication, and to keep studying how well people can tell humans and machines apart.
