Recent research by Xunyu Chen, an assistant professor at Virginia Commonwealth University, examines the behavioral cues that signal deception and trust. Chen and his team used data from a 2002 game show, “Friend or Foe?”, to develop a computerized system capable of detecting lies. Using artificial intelligence techniques such as machine learning and deep learning, the researchers extracted information from human behavior that can support better decision-making.

The study, titled “Trust and Deception with High Stakes: Evidence from the ‘Friend or Foe’ Dataset,” contributes to the quantitative study of high-stakes deception and trust. Traditional lab experiments can lack realism and generalizability; the game show dataset, by contrast, captures decisions made when the stakes were substantially higher. Contestants had to choose between cooperating for mutual benefit and betraying one another, which exposes how trust and deception shape decision-making.
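To make the contestants' dilemma concrete, the sketch below encodes the show's payoff rules as they are commonly described: if both players pick Friend they split the pot, if one picks Foe and the other Friend the Foe takes everything, and if both pick Foe neither wins anything. The names `Choice` and `payoff` are illustrative, not taken from the study. One property worth noticing is that Foe never pays less than Friend for a given opponent choice, which is why a player's read on the other person's trustworthiness matters so much.

```python
from enum import Enum


class Choice(Enum):
    """A contestant's secret decision (illustrative names, not from the study)."""
    FRIEND = "friend"
    FOE = "foe"


def payoff(a: Choice, b: Choice, pot: float) -> tuple[float, float]:
    """Return (winnings for player a, winnings for player b) under Friend or Foe rules."""
    if a is Choice.FRIEND and b is Choice.FRIEND:
        return pot / 2, pot / 2  # mutual cooperation: split the pot evenly
    if a is Choice.FOE and b is Choice.FRIEND:
        return pot, 0.0  # a betrays a trusting partner and takes everything
    if a is Choice.FRIEND and b is Choice.FOE:
        return 0.0, pot  # b betrays a trusting partner and takes everything
    return 0.0, 0.0  # both betray: nobody wins


# Print the full payoff table for a hypothetical 10,000-dollar pot.
for a in Choice:
    for b in Choice:
        print(a.value, b.value, payoff(a, b, 10_000))
```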

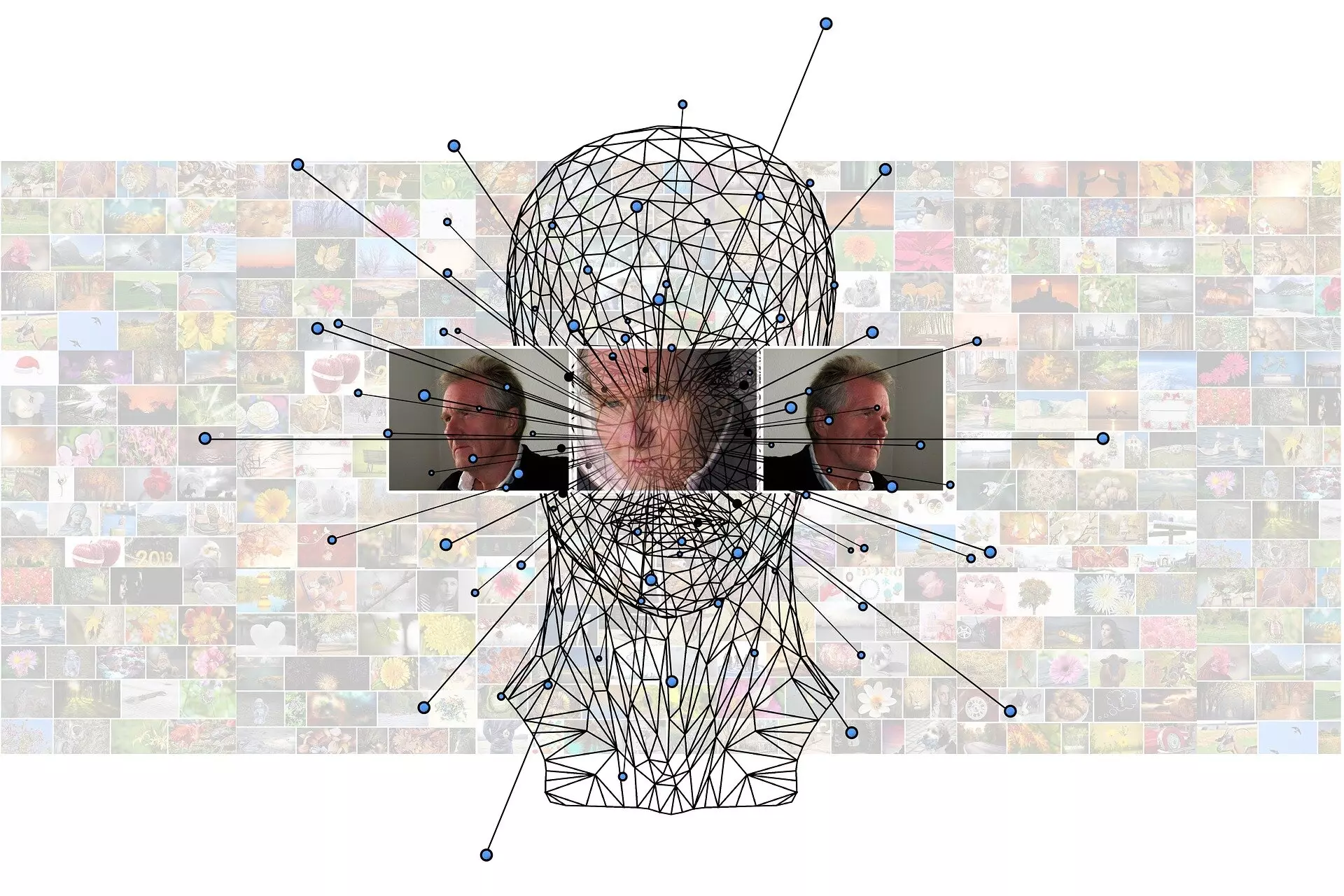

Through their analysis, Chen and his team identified multimodal behavioral indicators associated with deception and trust in high-stakes settings. These indicators, which include facial expressions, verbal cues, and fluctuations in movement, can be used to predict deception with a high degree of accuracy. An automated deception detector built on such cues offers a new way to study human behavior in critical situations and to predict outcomes from observable behavior.

The findings have implications for real-world settings such as presidential debates, business negotiations, and court trials. By applying insights from high-stakes decision-making, researchers and practitioners can better analyze behavior in situations where deception and trust play crucial roles. Predicting deceptive behavior accurately can help people protect their own interests and make informed decisions when the stakes are high.

The research led by Xunyu Chen sheds light on the interplay between deception and trust in high-stakes decision-making. By combining data-driven techniques with analysis of behavioral cues, researchers can better anticipate deceptive behavior, and automated deception detection systems represent a significant step toward understanding human behavior in critical scenarios.
