As technology evolves, companies face the dual challenge of enhancing security and maintaining user privacy. Meta Platforms, Inc., formerly known as Facebook, has historically found itself at the crossroads of these two imperatives, especially when it comes to facial recognition technology. Recently, Meta announced a series of tests involving facial recognition processes designed to combat scams that leverage images of celebrities to lure unsuspecting users. However, given Meta’s contentious history with privacy and facial recognition, this renewed engagement raises pertinent questions about security, ethics, and user trust.

Meta’s latest initiative aims to thwart “celeb-bait” scams, a tactic in which fraudsters use images of public figures to attract clicks on malicious advertisements. Using a facial matching process, Meta plans to compare faces in suspicious ads with the profile pictures of high-profile individuals on Facebook and Instagram. If a match is confirmed, Meta’s systems will then assess whether the promotion is legitimate. According to the company, any facial data generated for this check is used once and is not stored, even when a match is detected.
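To make the matching step concrete, here is a minimal sketch of how such a comparison could work in principle. It is an illustration only and not Meta’s implementation, which has not been published: the names `PublicFigure`, `check_ad_face`, and `MATCH_THRESHOLD` are hypothetical, the face embeddings are assumed to have been computed elsewhere, and cosine similarity is just one common way to compare them.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# Hypothetical cutoff; a real system would tune this against labeled data.
MATCH_THRESHOLD = 0.8


@dataclass(frozen=True)
class PublicFigure:
    """A public figure with a reference face embedding (assumed precomputed)."""
    name: str
    embedding: Tuple[float, ...]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def check_ad_face(
    ad_embedding: Sequence[float],
    figures: Sequence[PublicFigure],
    threshold: float = MATCH_THRESHOLD,
) -> Optional[str]:
    """Return the best-matching figure's name if it meets the threshold, else None.

    Only the name is returned. The ad's embedding is never written anywhere,
    mirroring the one-time-use idea described above.
    """
    best_name, best_score = None, threshold
    for figure in figures:
        score = cosine_similarity(ad_embedding, figure.embedding)
        if score >= best_score:
            best_name, best_score = figure.name, score
    return best_name
```

In this sketch, a returned name would only flag the ad for a legitimacy review; a `None` result means no further action is taken and the embedding is simply discarded by the caller.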

This approach highlights the duality of facial recognition: it can be a robust tool for protecting users, yet it also wades into murky privacy territory. Past missteps by Meta and others in deploying facial recognition have fueled public distrust of the technology, so this effort is likely to draw particularly close scrutiny.

Meta’s troubled history with facial recognition was punctuated by a significant policy shift in 2021, when it shut down its face recognition system on Facebook. The decision was primarily a response to widespread public backlash and regulatory pressure over the potential misuse of facial recognition data. Instances of invasive surveillance, such as authoritarian regimes employing facial recognition for social control, were alarming precedents that contributed to a climate of skepticism.

For example, the Chinese government’s extensive use of facial recognition to monitor and penalize citizens has been widely criticized, particularly in the context of human rights abuses against minority populations. The fear is that facial recognition technology could facilitate similar abuses if proper checks are not put in place. This historical context cannot be ignored as Meta now reintroduces aspects of facial recognition technology, prompting both technologists and regulators to question how this will be governed moving forward.

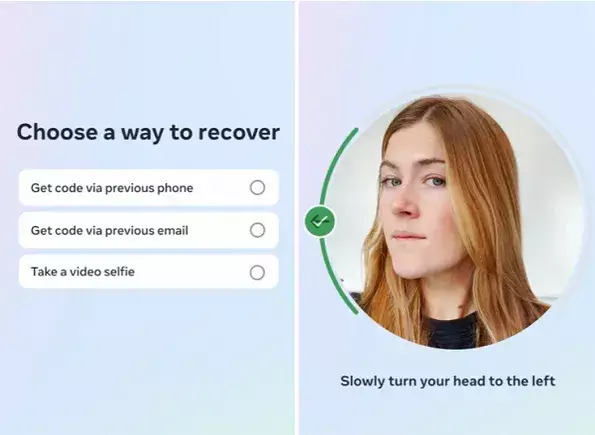

In addition to combating scams, Meta is testing video selfies for identity verification, a feature aimed at users who are locked out of their accounts. In this process, a user uploads a video selfie, which Meta compares against the account’s existing profile pictures. As with its scam-fighting initiative, Meta promises that video selfies will be encrypted, stored securely, and deleted promptly once the comparison is complete.

While the intentions behind video selfies align with user security, the implications remain complex. Critics argue that although Meta claims not to retain any data, what a system actually does often diverges from what a company says it does. The tension between user convenience and privacy rights is palpable, as even well-meaning initiatives can violate trust if they are mishandled or if a security breach occurs.

As Meta continues to explore facial recognition technology, the ethical implications cannot be overlooked. The company will likely face increased scrutiny from regulators and privacy advocates alike, particularly regarding how it manages and stores user data. Previous controversies cast a long shadow, and as Meta endeavors to position itself as a security-conscious platform, it must also work toward rebuilding its reputation.

The question arises: should Meta pursue further implementation of facial recognition technologies? Although there is a reasonable case that facial identification can enhance security, the risks are substantial. Data misuse and invasive verification methods could exacerbate user fears and further diminish trust in the platform.

Ultimately, a balanced approach that prioritizes user privacy and transparent data handling can help mitigate risks. Meta must engage in ongoing dialogue with stakeholders, including privacy experts and users, to guide the ethical implementation of facial recognition in a way that respects individual rights while addressing security needs.

Meta’s renewed interest in facial recognition technology comes at a pivotal moment. The initiatives aimed at safeguarding users against scams and enabling easier identity verification are promising; however, the specter of privacy concerns looms large. For Meta, success will depend not just on how well these systems work, but on the company’s ability to reassure users that their privacy is a top priority. In navigating these choppy waters, proactive communication and ethical governance will be crucial in defining the future relationship between Meta and its users.
