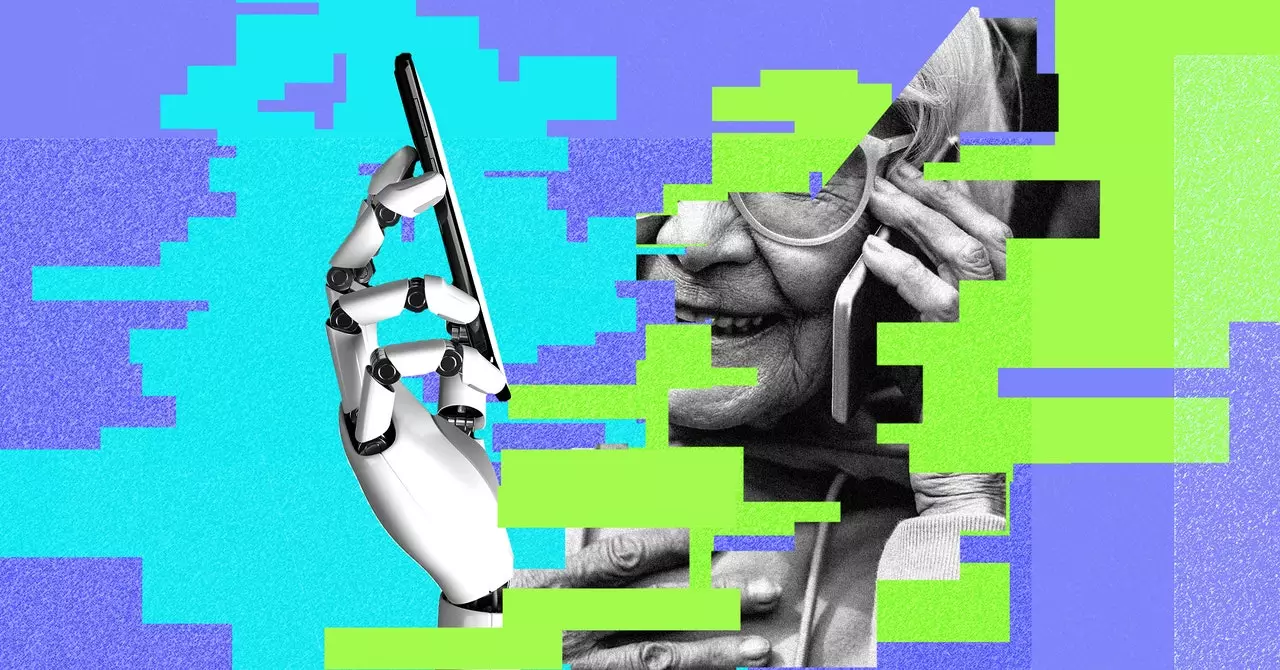

In today’s digital age, scammers have found a new tool for exploiting unsuspecting victims: artificial intelligence voice cloning. These AI tools are becoming increasingly sophisticated, making it easier for scammers to create fake audio of people’s voices that is remarkably convincing. Working from existing clips of a person’s speech, AI voice cloning tools can imitate almost anyone, including your loved ones.

OpenAI, the creator of ChatGPT, recently introduced a new text-to-speech model, a sign that convincing synthetic voices are becoming easier to produce and, in turn, more accessible to scammers. Other tech startups are also working on replicating near-perfect human speech, and the field is advancing rapidly. As a result, traditional ways of spotting fake calls, such as listening for awkward pauses or delays in the caller’s responses, are no longer reliable.

Security experts emphasize the importance of taking proactive measures to protect yourself from AI voice cloning scams. One effective strategy is to always verify the caller’s identity before sharing any sensitive information or sending money. If you receive an unexpected call asking for money or personal details, hang up and call the person back on a number you already know is theirs, or reach them through another channel such as video chat or email.

To add an extra layer of security, consider agreeing on a safe word with your loved ones that can be used to verify their identity over the phone. This can be particularly helpful for vulnerable individuals, such as young children or elderly relatives, who may find it difficult to communicate through other means. Because the word or phrase is agreed in advance and known only within your family, you can quickly authenticate the caller in an emergency.

If you’re unsure whether a distressing call is genuine, take a moment to ask a personal question that only your loved one would know the answer to. This could be a specific detail about your shared experiences or memories that a scammer could not easily look up or guess. A caller who cannot answer such questions convincingly is a strong warning sign, so testing them this way can help you avoid falling victim to AI voice cloning fraud.

It’s essential to recognize that AI-powered scams are not limited to high-profile figures like celebrities or politicians. Everyday people, including you, are also at risk of having their voices cloned by malicious actors. With just a few seconds of your voice taken from social media, online videos, or other recordings, scammers can create convincing audio clones to deceive unsuspecting victims.

The threat of AI voice cloning scams is real and evolving rapidly. By staying alert and implementing precautionary measures like verifying the caller’s identity, using safe words, and asking personal questions, you can protect yourself from falling prey to malicious schemes. Remember that scammers rely on emotional manipulation and a sense of urgency to exploit their targets, so taking a step back to evaluate the situation can make all the difference in avoiding a potential scam. Stay vigilant, stay informed, and stay safe in the digital world.
