In the rapidly evolving landscape of artificial intelligence, the Chinese startup DeepSeek is carving out a niche by releasing open-source models that challenge established industry giants. Emerging from the quantitative hedge fund High-Flyer Capital Management, DeepSeek aims to democratize AI technologies and to contribute to the development of artificial general intelligence (AGI), a goal in which machines can perform any intellectual task that a human can. Its latest offering, DeepSeek-V3, is a significant step in that mission.

DeepSeek-V3 builds on its predecessor, DeepSeek-V2, with an architecture designed to use large computational resources efficiently. The model has 671 billion parameters in total, but its mixture-of-experts (MoE) design activates only a subset of them for each token (about 37 billion, according to DeepSeek), so the compute spent per token is far lower than the full parameter count suggests. According to DeepSeek’s benchmarks, the model outperforms leading open-source models such as Meta’s Llama 3.1 and comes close to proprietary models from OpenAI and Anthropic.
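To make the idea of activating only some parameters concrete, here is a minimal sketch of generic top-k expert routing. It is not DeepSeek's actual router: the function names, the toy experts, and the router scores are all hypothetical, and real MoE layers operate on vectors rather than single numbers.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    top = ranked[:k]
    # Weights are normalized over the selected experts only.
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in route_token(router_scores, k))

# Hypothetical toy experts: each one just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, -1.0, 2.0]   # assumed output of a learned router

selected = route_token(router_scores, k=2)
output = moe_layer(1.0, experts, router_scores, k=2)
print(selected)  # experts 1 and 3, each with weight 0.5
print(output)    # 3.0
```

Only two of the four toy experts run for this token; the other two contribute no compute, which is the property that lets a very large model stay relatively cheap per token.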

DeepSeek-V3 is not just another model with a high parameter count; it introduces advances that improve both training and inference. Its auxiliary-loss-free load-balancing strategy keeps the workload spread evenly across experts without adding an extra balancing term to the training loss, a term that can otherwise degrade overall model performance.
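The article does not spell out how the load balancing works, so the following is only a sketch under one assumption: each expert carries a bias that is added to its routing score when experts are selected, and after every batch the bias is lowered for overloaded experts and raised for underloaded ones. The skewed router, the step size, and all names here are hypothetical.

```python
import random

def select_experts(scores, bias, k):
    """Pick the top-k experts by score + bias (bias affects selection only)."""
    ranked = sorted(range(len(scores)),
                    key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:k]

def adjust(bias_value, load, mean_load, step_size):
    """Lower the bias of an overloaded expert, raise it for an underloaded one."""
    if load > mean_load:
        return bias_value - step_size
    if load < mean_load:
        return bias_value + step_size
    return bias_value

def simulate(num_experts=4, k=1, tokens_per_batch=200, batches=200,
             step_size=0.01, seed=0):
    rng = random.Random(seed)
    bias = [0.0] * num_experts
    # Assumption for the demo: the router systematically favours expert 0.
    skew = [0.5] + [0.0] * (num_experts - 1)
    history = []
    for _batch in range(batches):
        load = [0] * num_experts
        for _token in range(tokens_per_batch):
            scores = [rng.random() + skew[i] for i in range(num_experts)]
            for expert in select_experts(scores, bias, k):
                load[expert] += 1
        history.append(load)
        mean_load = sum(load) / num_experts
        bias = [adjust(b, n, mean_load, step_size) for b, n in zip(bias, load)]
    return history, bias

history, final_bias = simulate()
print("first batch load:", history[0])
print("last batch load: ", history[-1])
print("final bias:      ", [round(b, 2) for b in final_bias])
```

In this toy run the favoured expert takes most of the tokens at first and its bias drifts negative until the load evens out, all without touching a loss function.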

Furthermore, multi-token prediction (MTP) trains the model to predict several future tokens at once rather than only the next one. DeepSeek reports a generation speed of about 60 tokens per second, a major increase over what earlier models typically achieved. Such advances not only expand the model's capabilities but also point to a shift in how AI could handle complex tasks in real time.
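As a rough illustration of what predicting multiple future tokens means for the training data, the sketch below builds the targets for each position in a sequence. It only shows how targets are laid out; it does not model the prediction modules themselves, and the function name and `depth` parameter are hypothetical.

```python
def multi_token_targets(tokens, depth):
    """Pair each position's current token with the next `depth` tokens."""
    return [
        (tokens[i], tokens[i + 1 : i + 1 + depth])
        for i in range(len(tokens) - depth)
    ]

tokens = ["The", "cat", "sat", "on", "the", "mat"]
targets = multi_token_targets(tokens, depth=2)
for current, future in targets:
    print(f"after {current!r} predict {future}")
```

With `depth=1` this reduces to ordinary next-token prediction; larger values give the model more training signal per position.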

Developing DeepSeek-V3 required significant investment in both data and compute. The model was trained on 14.8 trillion tokens, reflecting the emphasis DeepSeek places on the quantity and diversity of its data when preparing models for real-world use. DeepSeek then extended the context length in two phases, first to 32,000 tokens and then to 128,000 tokens.

Impressively, the entire training run cost an estimated $5.57 million, a fraction of the hundreds of millions of dollars typically cited for training comparable large-scale models. This economy matters for the sustainability of open-source AI, because it challenges the cost structures that major closed-source players rely on.
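The cost estimate is simple arithmetic once the inputs are fixed. The sketch below assumes roughly 2.788 million H800 GPU-hours priced at $2 per GPU-hour, the figures given in DeepSeek's technical report rather than in this article; the price is an assumed rental rate, so treat the result as an estimate, not an audited bill.

```python
GPU_HOURS = 2_788_000          # assumed total GPU-hours for the training run
PRICE_PER_GPU_HOUR = 2.00      # assumed rental price in USD
TRAINING_TOKENS = 14.8e12      # 14.8 trillion tokens, as stated in the article

def training_cost_usd(gpu_hours, price_per_hour):
    """Estimated compute cost: GPU-hours multiplied by the hourly price."""
    return gpu_hours * price_per_hour

cost = training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR)
cost_per_billion_tokens = cost / (TRAINING_TOKENS / 1e9)

print(f"Estimated training cost: ${cost / 1e6:.3f} million")
print(f"Cost per billion training tokens: ${cost_per_billion_tokens:.2f}")
```

Under these assumptions the total comes to about $5.576 million, in line with the figure quoted above. It covers compute for the training run only, not research, staffing, or earlier experiments.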

Benchmark results show that DeepSeek-V3 excels in a range of evaluations, including math-centric and Chinese-language tests, establishing it as a serious competitor to both open-source and closed-source models. Although it performed very well against its peers, it showed minor shortcomings on certain English-focused benchmarks, where OpenAI’s GPT-4o held an advantage. Even so, the results underscore how far open-source models have come in narrowing the performance gap with proprietary AI.

The rise of DeepSeek and its innovations signals a pivotal transformation in the AI arena. With the introduction of DeepSeek-V3, we see a potential paradigm shift: the competitive field is widening, creating room for open models to coexist with closed systems from dominant players. Enterprises and developers now have robust alternatives, which lets them integrate AI into their operations without depending on a single provider.

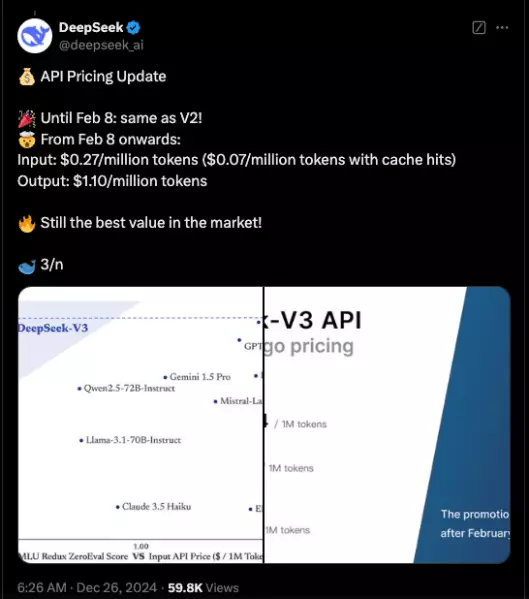

DeepSeek plans to maintain its momentum by open-sourcing the model on GitHub and providing an API for commercial use, a sign of its commitment to collaborative advancement. Such efforts not only encourage innovation but also help ensure that power in the technology landscape is not concentrated in the hands of a few.

DeepSeek-V3 is more than just an expansive model; it heralds a new chapter in the pursuit of AGI and the evolution of AI as an open-source movement. DeepSeek’s iteration challenges established norms, raises the bar for performance, and ignites renewed competition in AI development. As the lines between open-source and closed-source AI continue to blur, the industry’s trajectory appears poised for increasingly inclusive progress and innovation, paving the way for a future where the capabilities of artificial intelligence are accessible to all.
