Artificial intelligence (AI) has changed how we interact with technology, particularly through large language models (LLMs) like GPT-4. These models have greatly improved tasks ranging from text generation to coding assistance and chatbots. At their core, LLMs rely on a simple yet effective method: predicting the next word in a sentence from the preceding context. A recent investigation has shed light on an intriguing question: how well do these models perform when asked to predict backward, that is, to guess the previous word from the words that follow it?

The phenomenon, termed the “Arrow of Time,” was explored by researchers including Professor Clément Hongler from the École Polytechnique Fédérale de Lausanne (EPFL) and Jérémie Wenger from Goldsmiths, London. Their exploration began with a simple question: can AI models generate stories backward, beginning from a conclusion rather than a premise? The results showed that LLMs were consistently worse at predicting previous words from subsequent ones than at predicting next words from preceding ones, revealing a clear asymmetry in how they process text.

The study covered multiple model architectures, including Generative Pre-trained Transformers (GPTs), Gated Recurrent Units (GRUs), and Long Short-Term Memory (LSTM) networks. Regardless of the model type, the researchers observed the same bias: the models were better at predicting the next word than the previous word. This consistency suggests a fundamental property of how such models engage with language rather than a quirk of any single architecture.
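To make the comparison concrete, here is a minimal sketch of how a forward-versus-backward gap can be measured. It is not the researchers' setup: it swaps the neural networks for a toy character-level bigram model, and the function names (`train_bigram`, `bits_per_char`, `forward_backward_gap`) and the sample sentences are made up for illustration. The idea is only to show the procedure: train one model on the text as written, train another on the reversed text, and compare their average prediction error on held-out text.

```python
import math
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each character follows each one-character context."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def bits_per_char(counts, text, vocab):
    """Average cross-entropy in bits per predicted character (add-one smoothing)."""
    total = 0.0
    n = 0
    for prev, nxt in zip(text, text[1:]):
        ctx = counts.get(prev, Counter())
        prob = (ctx[nxt] + 1) / (sum(ctx.values()) + len(vocab))
        total -= math.log2(prob)
        n += 1
    return total / n


def forward_backward_gap(train_text, test_text):
    """Return (forward, backward) cross-entropy for the same train/test split.

    The backward model is simply the same model class trained and
    evaluated on the reversed strings.
    """
    vocab = set(train_text) | set(test_text)
    fw = bits_per_char(train_bigram(train_text), test_text, vocab)
    bw = bits_per_char(train_bigram(train_text[::-1]), test_text[::-1], vocab)
    return fw, bw


if __name__ == "__main__":
    # Illustrative toy data, not from the study.
    train = "the cat sat on the mat and the dog sat on the log " * 20
    test = "the dog sat on the mat and the cat sat on the log "
    fw, bw = forward_backward_gap(train, test)
    print(f"forward:  {fw:.3f} bits/char")
    print(f"backward: {bw:.3f} bits/char")
```

A model this small is not expected to reproduce the gap reported for neural networks, because a bigram model sees almost the same pair statistics in either direction. The sketch shows the evaluation recipe; the asymmetry described in the study concerns models that learn from much longer contexts.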

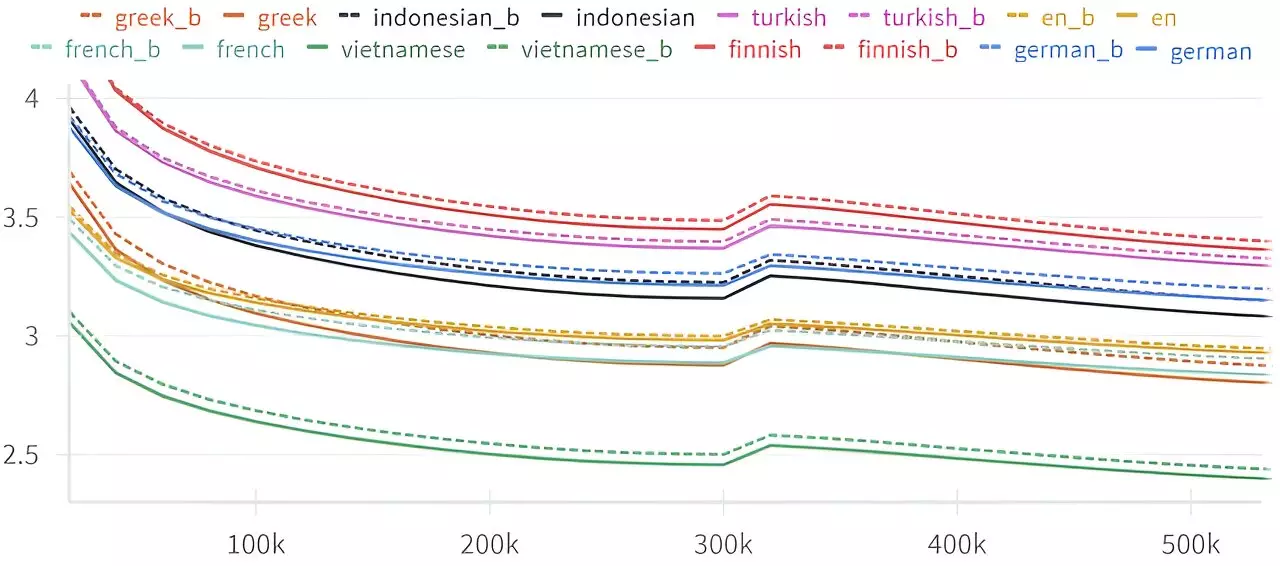

Professor Hongler explains that while the models predict competently in both directions, forward prediction always holds a slight edge, typically a few percentage points. The effect is not confined to a specific language; it appears across different languages. What does this mean for our understanding of language itself? It suggests that language contains inherent structures and temporal correlations that LLMs capture more effectively in the forward direction.

The research also has historical roots. It recalls the pioneering work of Claude Shannon, the founder of information theory. In his seminal 1951 paper, Shannon examined whether predicting the next letter in a sequence is as easy as predicting the previous one. He found that although the two tasks should be equally difficult in theory, humans find backward prediction more challenging, which aligns with the recent findings for LLMs. This consistent performance gap raises questions about how language and causality are intertwined and whether our understanding of intelligence in AI needs reassessment.
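The theoretical symmetry can be stated in one line using the chain rule of probability. The notation here is an illustrative assumption, not taken from the study: $w_1, \dots, w_n$ stands for a sequence of words (or letters) and $P$ for its true distribution. The same joint probability factorizes in either direction:

$$
P(w_1, \dots, w_n) \;=\; \prod_{i=1}^{n} P(w_i \mid w_1, \dots, w_{i-1}) \;=\; \prod_{i=1}^{n} P(w_i \mid w_{i+1}, \dots, w_n)
$$

Taking the negative logarithm of both factorizations gives

$$
\sum_{i=1}^{n} -\log P(w_i \mid w_1, \dots, w_{i-1}) \;=\; \sum_{i=1}^{n} -\log P(w_i \mid w_{i+1}, \dots, w_n),
$$

so a perfect predictor would accumulate the same total error reading left to right as reading right to left. Any gap between the two directions therefore reflects what a human or a model manages to learn in practice, not a difference in the information available.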

The implications of the Arrow of Time extend beyond computational linguistics. The researchers hypothesize that this property could help in detecting intelligent behavior and could inform the design of more capable LLMs. Studying the causal structure reflected in language may also open new ways to understand broader phenomena, including the nature of time in physics. Such insights could change our understanding not just of language but also of the mechanisms that underpin intelligence.

The research also has potential practical applications in storytelling and narrative generation. The original impetus for the inquiry was a theatrical collaboration on improvisation, where building a narrative backward from its conclusion could change how stories are crafted in interactive formats. Understanding the asymmetry could therefore inform the design of chatbots and creative applications that require narrative coherence, while also revealing deeper connections between language and causality.

The study of the Arrow of Time in artificial intelligence not only uncovers a distinctive property of language models but also prompts a reevaluation of how we think about language structure, intelligence, and causality. As researchers examine the relationship between prediction and temporal direction more closely, the way AI systems handle language may be refined, with benefits for applications across many fields. The findings open a promising avenue of research connecting cognitive science, artificial intelligence, and linguistics, and deepen our understanding of both machines and the complexities of human language.
