Large language models (LLMs) are now used in domains ranging from natural language processing to biomedical inquiry. When a task demands specialized knowledge, however, these generalist models can give inaccurate answers. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed Co-LLM, a method that lets a general-purpose model collaborate with a specialized one. The goal is smoother interaction between the two kinds of model and, as a result, more accurate generated text.

Collaborative learning is not just a human trait; it can also be replicated in artificial intelligence. Unlike approaches that rely on extensive datasets and complex rules to dictate how models should interact, Co-LLM introduces a more organic learning process. At its core, it employs a “switch variable” that determines when a general-purpose LLM should seek assistance from a specialized model. The switch acts as an intermediary, much like a project manager: it evaluates each word (or token) the general-purpose model generates and decides whether the expert model should supply that part of the response instead.

For instance, when asked to name extinct bear species, the general-purpose model begins drafting a response. The switch variable then pinpoints where that draft may lack precision and where the specialized model could offer more accurate or nuanced details, such as specific extinction dates. This interaction improves accuracy and also saves computation, because the expert model is engaged only when necessary.
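The token-by-token deferral described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: it assumes the switch is exposed as a per-token deferral probability compared against a fixed threshold, and every name here (`collaborative_decode`, `toy_base`, `toy_expert`, `threshold`) is hypothetical. The two "models" are scripted stand-ins working on the arithmetic problem a³ · a² with a = 5.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces, not Co-LLM's real API:
# the base model returns (next_token, deferral_probability),
# the expert model returns only a next token.
BaseModel = Callable[[List[str]], Tuple[str, float]]
ExpertModel = Callable[[List[str]], str]

EOS = "<eos>"


def collaborative_decode(
    base: BaseModel,
    expert: ExpertModel,
    prompt: List[str],
    max_tokens: int = 10,
    threshold: float = 0.5,  # assumed fixed cut-off for deferring
) -> Tuple[List[str], int]:
    """Generate token by token, deferring to the expert when the switch fires."""
    tokens = list(prompt)
    expert_calls = 0
    for _ in range(max_tokens):
        token, defer_prob = base(tokens)
        if defer_prob > threshold:
            # Switch fires: discard the base proposal and ask the expert.
            token = expert(tokens)
            expert_calls += 1
        if token == EOS:
            break
        tokens.append(token)
    return tokens[len(prompt):], expert_calls


PROMPT = ["a^3", "*", "a^2", "with", "a=5"]

# Scripted base model: confident on the setup, unsure about the final number.
BASE_SCRIPT = [("5^3", 0.1), ("*", 0.05), ("5^2", 0.1), ("=", 0.05), ("125", 0.9)]


def toy_base(tokens: List[str]) -> Tuple[str, float]:
    step = len(tokens) - len(PROMPT)  # number of tokens generated so far
    return BASE_SCRIPT[step] if step < len(BASE_SCRIPT) else (EOS, 0.0)


def toy_expert(tokens: List[str]) -> str:
    # Stand-in for a math-specialized model.
    return str(5 ** 3 * 5 ** 2)


generated, expert_calls = collaborative_decode(toy_base, toy_expert, PROMPT)
print(" ".join(generated), "| expert calls:", expert_calls)
# prints: 5^3 * 5^2 = 3125 | expert calls: 1
```

In this toy run the expert is consulted for exactly one of the five generated tokens, which is the efficiency argument in miniature: the base model handles the routine tokens and the more specialized model is called only where the switch signals low confidence.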

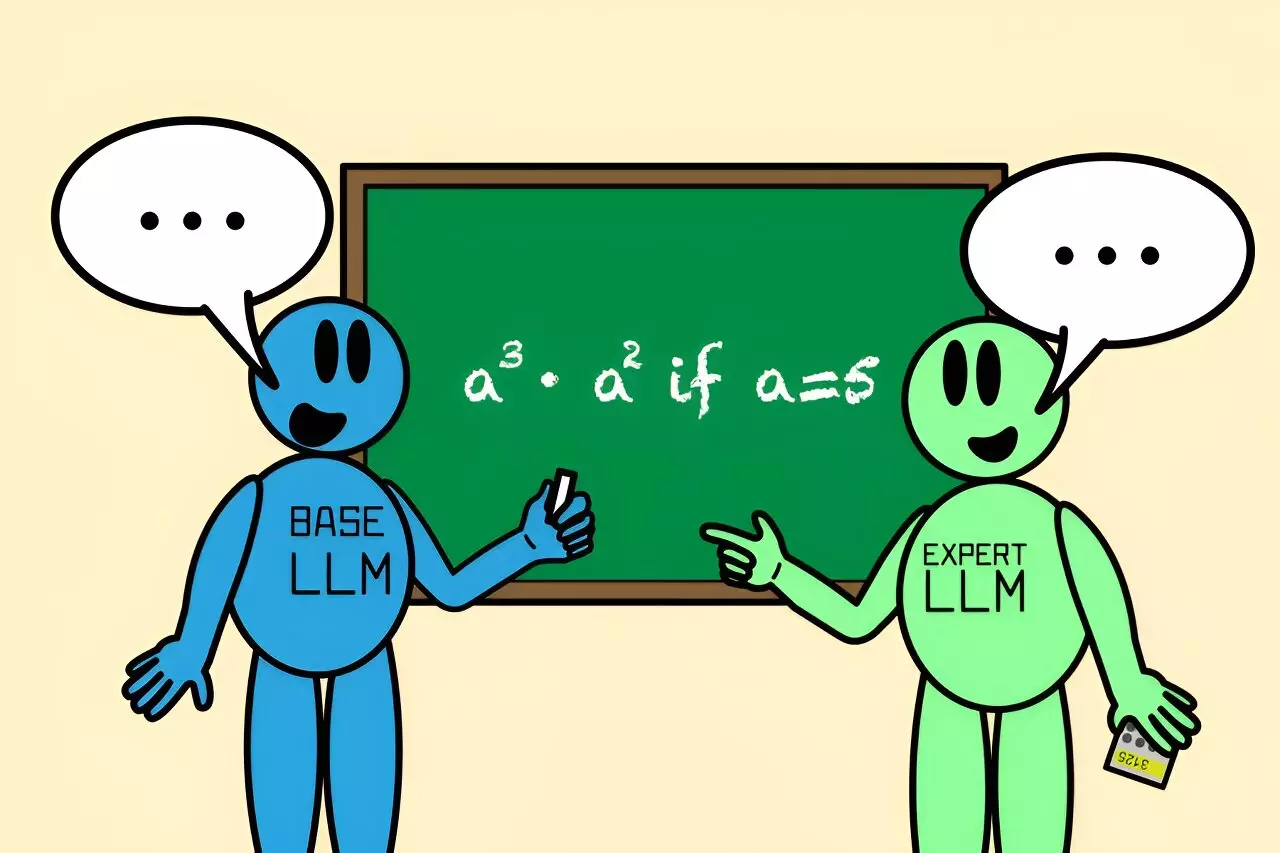

Consider scenarios that require highly specialized knowledge, such as asking for the composition of a specific pharmaceutical drug or solving an intricate mathematical equation. A general-purpose LLM working alone might fall short or give misleading information in these contexts. Co-LLM addresses this by pairing a capable general-purpose model with an expert model trained in the relevant area, such as biomedicine or mathematics.

By training the base LLM to recognize when it is venturing into territory that requires specialized input, the researchers have developed a framework that mirrors how people decide to consult an expert. For instance, given a math problem such as calculating “a³ · a² if a=5,” a general-purpose LLM might incorrectly arrive at 125. When Co-LLM pairs it with a specialized mathematical model called Llemma, the two arrive at the correct answer of 3,125. Such results illustrate Co-LLM’s potential to make different models work together, outperforming fine-tuned models working alone and many other conventional strategies.
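The arithmetic in this example can be checked by hand with the product rule for exponents. The derivation below is a worked check added for illustration; the source does not show these steps.

$$
a^3 \cdot a^2 = a^{3+2} = a^5, \qquad a = 5 \;\Rightarrow\; 5^5 = 3125.
$$

Note that 125 equals 5³, so the incorrect answer is what results if only the first factor is evaluated. This is one plausible reading of the error, not something the source states.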

The future potential of Co-LLM extends well beyond immediate improvements in accuracy. One of its remarkable features is its adaptability; as models learn from a wider array of domain-specific data, they can continuously refine their ability to collaborate. This evolutionary process enables the general-purpose model to acknowledge its limitations and turn to the expert model only when necessary, thereby honing its skills further.

Furthermore, the researchers are considering systems that would allow the model to correct itself should it encounter flawed information from its expert counterpart. The ability to backtrack and reassess not only boosts factual accuracy but also encourages ongoing learning within the framework, allowing Co-LLM to remain relevant as new knowledge emerges. The potential applications of this are vast—from updating enterprise documentation to aiding smaller private models in need of powerful backup in areas where sensitive data must stay internal.

As Co-LLM matures, the impact of its collaborative learning model could resonate in numerous domains. The integration of expert knowledge with basic model comprehension opens the door to a more sophisticated handling of information across various fields. This approach could notably benefit medical professionals, educators, and researchers needing access to up-to-date data paired with strong analytical capabilities. Additionally, Co-LLM could help streamline the processes involved in creating and updating essential documents, yielding faster and more reliable outcomes.

Moreover, the collaboration framework suggests that AI isn’t merely about quantity—having large datasets—but also about quality and accuracy in knowledge application. The reflexive nature of Co-LLM in recognizing when expertise is necessary mirrors the best practices of human interactions, which could one day revolutionize how we interact with artificial intelligence.

The Co-LLM framework is a promising advance for LLMs. By coupling general-purpose and specialized models, it points toward a future where AI not only performs tasks but also recognizes the limits of its own knowledge and collaborates accordingly, with an emphasis on accuracy, efficiency, and continued learning.
