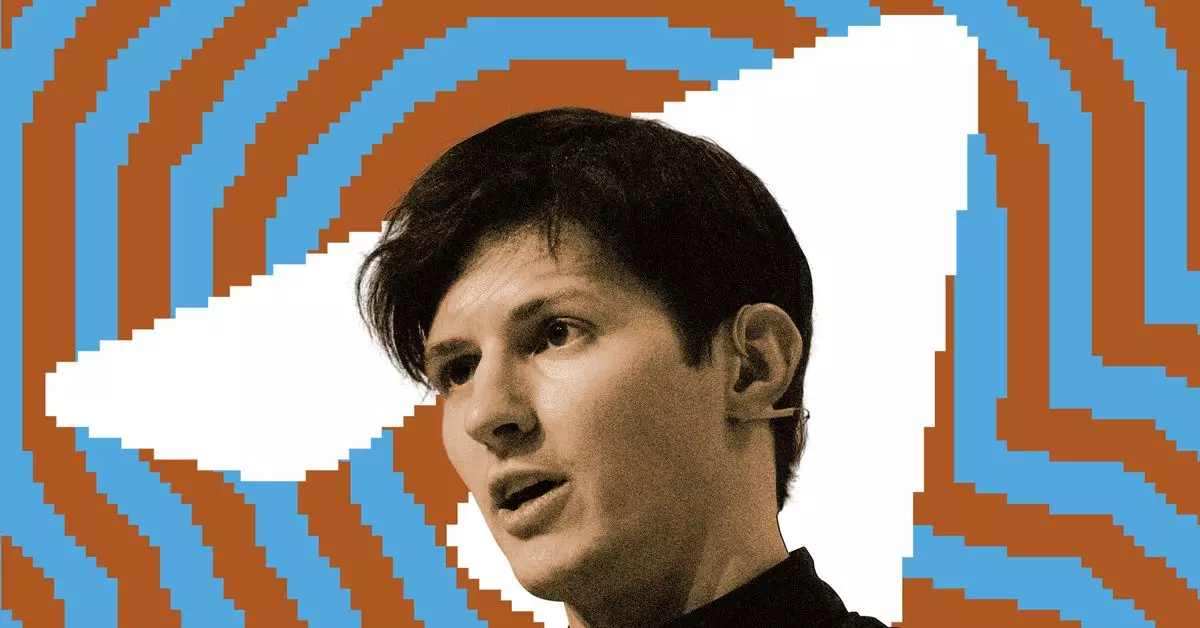

Telegram, a popular messaging app, has recently made significant changes to its content moderation policies. These changes come in the wake of CEO Pavel Durov’s arrest in France, where he was accused of allowing criminal activity to take place on the platform. Initially, the company maintained a defiant stance, claiming that it was not responsible for how its platform was being used. However, Durov’s recent statement indicates a shift in tone, acknowledging the need for increased moderation to prevent abuse of the platform.

Updated FAQ Page

One of the most noticeable changes in Telegram’s approach is the recent revision of its FAQ page. Previously, the page stated that private chats were protected from moderation requests. However, this language has since been removed, signaling a shift towards a more proactive moderation strategy. The updated FAQ page now includes instructions on how users can report illegal content using the platform’s built-in tools.

Legal Issues

Durov’s arrest in France was related to allegations that Telegram was being used to distribute child sexual abuse material and facilitate drug trafficking. French authorities claimed that the company had been uncooperative in the investigation, leading to charges being filed against the CEO. This legal battle has prompted Telegram to reassess its content moderation practices to ensure that illegal activities are not taking place on the platform.

Telegram has faced significant challenges as its user base has grown rapidly to 950 million. This surge in popularity has made it easier for criminals to exploit the platform. In response, Durov has vowed to improve moderation efforts and has already begun internal processes to address these issues. He has promised to provide more details on the progress made in enhancing content moderation on Telegram.

While Telegram has been used as a source of important information during events such as Russia’s war in Ukraine, the platform has traditionally maintained a hands-off approach to content moderation. However, the recent legal issues faced by the company have highlighted the importance of implementing stricter moderation policies to prevent the platform from being misused for criminal activities.

Telegram’s recent changes in content moderation policies reflect a shift towards a more responsible stance on ensuring the safety and legality of its platform. The company’s response to legal challenges and user concerns demonstrates a willingness to adapt and improve its practices to address the evolving landscape of online communication.
