When ChatGPT was first introduced in November 2022, it was accessible only through the cloud because the model powering it was so large. Today, I am running a capable AI program on my MacBook Air, and it isn’t even making the laptop hot. This shift shows how quickly AI research has progressed at making models more compact and efficient.

Introducing Phi-3-mini and its Capabilities

The model running on my laptop, Phi-3-mini, belongs to a new family of smaller AI models developed by Microsoft’s researchers. Despite its size, Phi-3-mini performs comparably to GPT-3.5, the model behind the original ChatGPT, on standard AI benchmarks that measure common sense and reasoning. In my own testing, it seems just as capable as its larger counterparts.

Exploring Multimodal AI Models

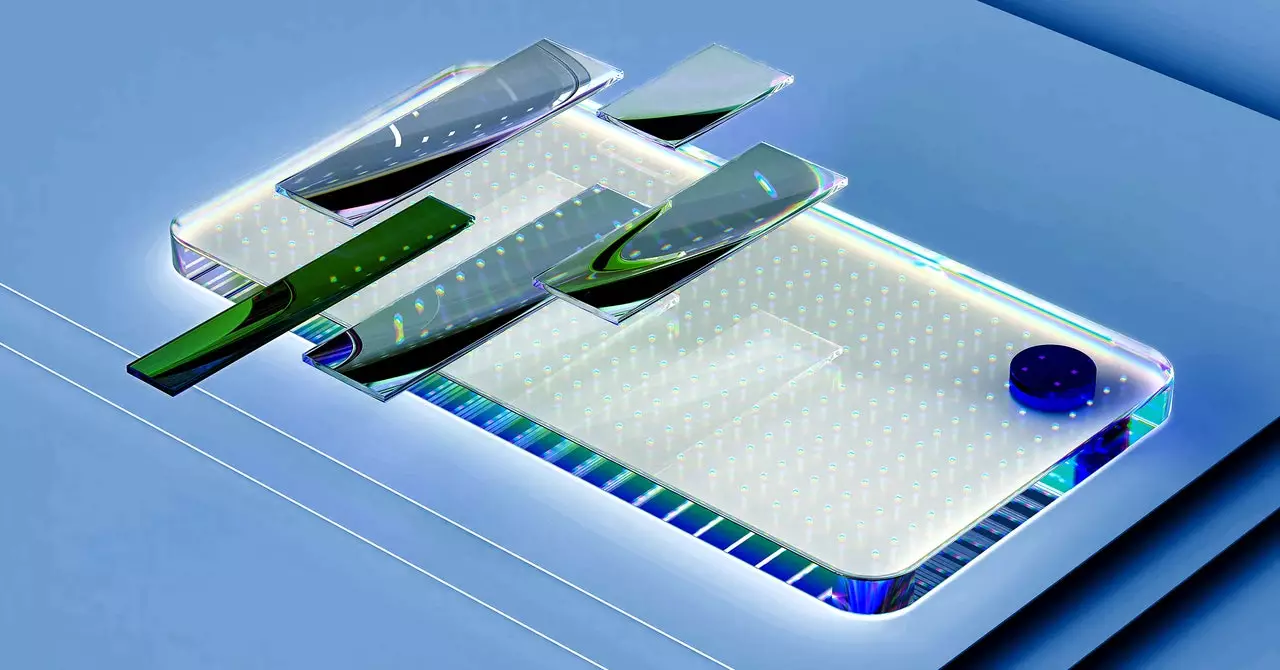

Microsoft recently unveiled a new “multimodal” Phi-3 model, Phi-3-vision, at Build, its annual developer conference. It can process images as well as text. Multimodal models this small open up many possibilities for versatile AI applications that do not rely on cloud computing, which could make those applications more responsive and more private.

The Phi family also points to a different way of improving AI. According to Sébastien Bubeck, a researcher at Microsoft, the models were built to test whether being more selective about training data could improve performance. Conventional large language models ingest enormous amounts of text from many sources. The Phi models instead favor quality over quantity, aiming for capable systems without massive datasets or heavy computational resources.

Compact, efficient models like Phi-3-mini signal a shift in how AI is developed. The long-standing reliance on massive datasets and raw computing power is being challenged by a more deliberate approach to training. As the field evolves, the emphasis is increasingly on getting more capability out of fewer resources. Beyond performance, this shift has implications for data privacy and power consumption.

The arrival of smaller models like Phi-3-mini on everyday devices shows how artificial intelligence continues to evolve. By prioritizing efficiency and versatility, researchers and developers are opening the way to smarter, more compact AI that could transform many industries. As the technology changes, it remains important to address the ethical and practical implications of these advances so that AI innovation stays responsible and sustainable.
