Large language models (LLMs) are now used in a wide range of applications to generate and interpret text. A significant problem with these models, however, is hallucination: the model produces responses that are incoherent or factually incorrect.

Researchers at DeepMind have proposed a new approach to LLM hallucinations that teaches the model when to abstain. The method combines self-evaluation with conformal prediction. The model generates several candidate responses to a query and assesses how similar they are to one another. If the responses disagree too much, that inconsistency signals that any single answer may be unreliable, and the model declines to answer rather than risk producing nonsensical or incorrect information. Conformal prediction sets the abstention threshold so that the hallucination rate stays controlled.
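The core idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DeepMind's implementation. The method described above relies on the model's own self-evaluation to judge whether two sampled responses match. The hypothetical `responses_match` helper below replaces that step with normalized exact string matching, so the example runs without a model. The confidence score is the fraction of the other samples that agree with the best-supported response. The hypothetical `calibrate_threshold` then applies conformal risk control, one standard conformal procedure, to a labeled calibration set: it picks the smallest threshold whose adjusted empirical hallucination risk is at most `alpha`. The paper's exact score and calibration rule may differ.

```python
import math


def normalize(text: str) -> str:
    """Lowercase, trim, drop a trailing period, and collapse whitespace."""
    return " ".join(text.lower().strip().rstrip(".").split())


def responses_match(a: str, b: str) -> bool:
    # HYPOTHETICAL stand-in for the LLM self-evaluation step: the real method
    # asks the model whether two responses agree; here we compare normalized strings.
    return normalize(a) == normalize(b)


def agreement_score(responses: list[str]) -> float:
    """Fraction of the other samples that agree with the best-supported response."""
    n = len(responses)
    if n < 2:
        raise ValueError("need at least two sampled responses")
    best_support = 0
    for i, r in enumerate(responses):
        support = sum(responses_match(r, s) for j, s in enumerate(responses) if j != i)
        best_support = max(best_support, support)
    return best_support / (n - 1)


def calibrate_threshold(scores: list[float], wrong: list[bool], alpha: float) -> float:
    """Conformal risk control over candidate thresholds (a sketch).

    Loss of calibration example i at threshold lam is 1 if the model answers
    (scores[i] >= lam) and the answer is wrong, else 0. This loss never
    increases as lam grows, which conformal risk control requires.
    Returns the smallest candidate lam with
    n/(n+1) * empirical_risk(lam) + 1/(n+1) <= alpha, or math.inf (always abstain).
    """
    n = len(scores)
    if n == 0 or n != len(wrong):
        raise ValueError("scores and wrong must be non-empty and equal length")
    for lam in sorted(set(scores)):
        risk = sum(1 for s, w in zip(scores, wrong) if s >= lam and w) / n
        if n / (n + 1) * risk + 1 / (n + 1) <= alpha:
            return lam
    return math.inf


def should_answer(score: float, threshold: float) -> bool:
    """Answer only when the agreement score reaches the calibrated threshold."""
    return score >= threshold


if __name__ == "__main__":
    # Toy calibration set: agreement scores and whether each answer was wrong.
    cal_scores = [1.0, 1.0, 1.0, 0.75, 0.75, 0.5, 0.5, 0.25, 0.0]
    cal_wrong = [False, False, False, False, True, True, False, True, True]
    lam = calibrate_threshold(cal_scores, cal_wrong, alpha=0.35)
    score = agreement_score(["Paris", "paris.", "Lyon", "Paris"])
    print(lam, score, should_answer(score, lam))
```

Assume calibration and test queries are exchangeable. Then the expected hallucination risk at the returned threshold is at most `alpha`. With a small calibration set, the `1/(n+1)` correction can make the target unreachable. In that case the sketch returns infinity and the model always abstains.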

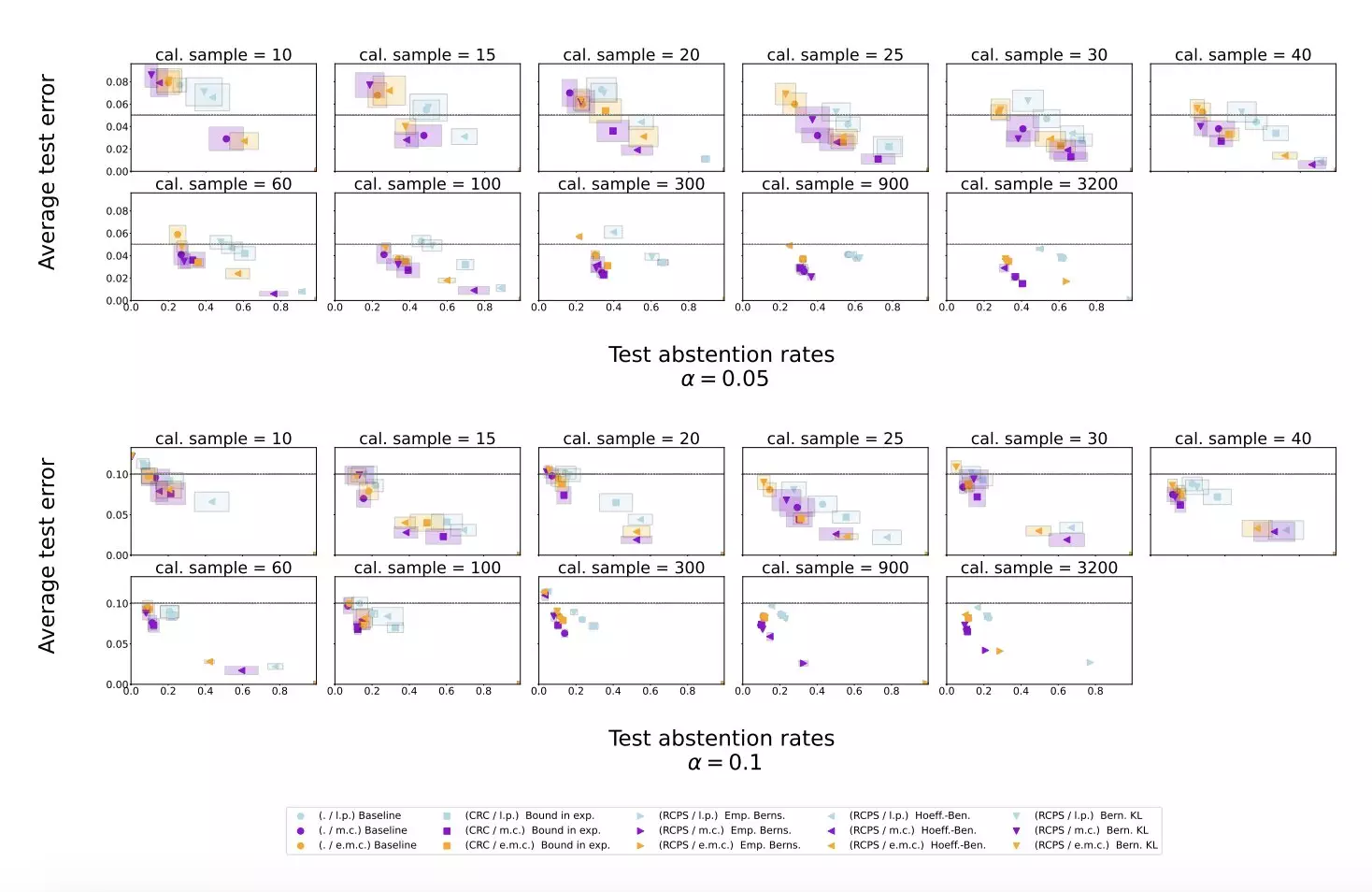

The researchers evaluated the method on publicly available question-answering datasets, applying it to Google's Gemini Pro model. Across these datasets, conformal abstention successfully controlled the hallucination rate. On datasets with long responses, it also abstained less often than traditional baseline scoring methods. In other words, it answered more questions while still keeping hallucinations in check.
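The two quantities in these experiments, hallucination rate and abstention rate, can be computed on a test set as in the sketch below. It assumes each query has a confidence score and a label saying whether the model's answer was wrong. The function name `evaluate_abstention` and the exact metric definitions are illustrative assumptions. The paper may define hallucination rate differently, for example relative to answered queries only, so both variants are reported.

```python
from dataclasses import dataclass


@dataclass
class AbstentionReport:
    abstention_rate: float            # fraction of queries the model declined to answer
    hallucination_rate: float         # answered-and-wrong / all queries
    error_rate_when_answering: float  # answered-and-wrong / answered queries


def evaluate_abstention(scores: list[float], wrong: list[bool], threshold: float) -> AbstentionReport:
    """Score a fixed abstention threshold on held-out test queries (illustrative)."""
    n = len(scores)
    if n == 0 or n != len(wrong):
        raise ValueError("scores and wrong must be non-empty and equal length")
    answered = [s >= threshold for s in scores]  # answer only when confident enough
    n_answered = sum(answered)
    n_hallucinated = sum(a and w for a, w in zip(answered, wrong))
    return AbstentionReport(
        abstention_rate=(n - n_answered) / n,
        hallucination_rate=n_hallucinated / n,
        error_rate_when_answering=n_hallucinated / n_answered if n_answered else 0.0,
    )


if __name__ == "__main__":
    report = evaluate_abstention([0.9, 0.8, 0.4, 0.2], [False, True, True, False], threshold=0.5)
    print(report)
    # → AbstentionReport(abstention_rate=0.5, hallucination_rate=0.25, error_rate_when_answering=0.5)
```

Suppose two scoring methods are each calibrated to the same hallucination target. The one with the lower `abstention_rate` is the less conservative. That is the sense in which the comparison with baselines on long-response datasets should be read.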

These results indicate that the method reduces LLM hallucinations without forcing the model to abstain more than necessary. Because it outperforms baseline scoring procedures, the approach is a promising way to make large language models more reliable.

DeepMind's study shows that LLM output can be accurate and trustworthy only if hallucinations are addressed. Its insights could lead to further strategies that help LLMs avoid erroneous responses. Progress on mitigating hallucinations will be key to expanding what these models can do and to their adoption in professional settings.
