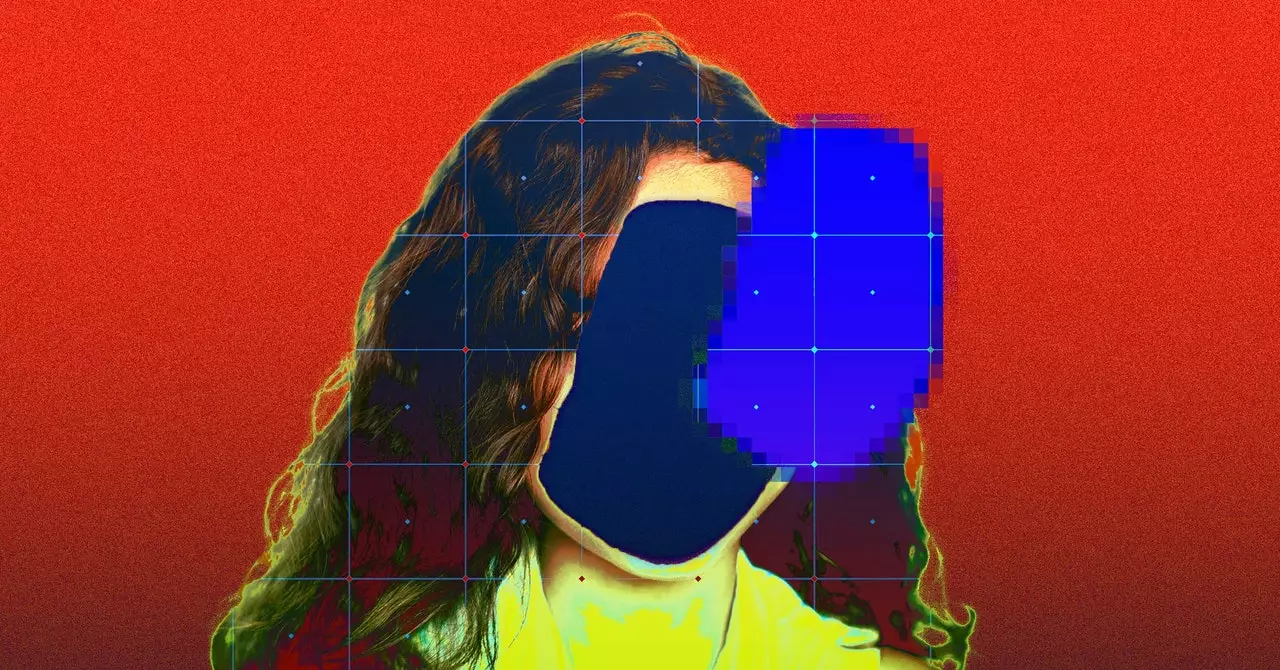

The unauthorized scraping and use of children's personal images in AI training datasets has raised significant concerns about privacy and consent. A recent Human Rights Watch report found that more than 170 photos of children from Brazil, some dating back to the mid-1990s, had been scraped without their consent.

According to the report, children's privacy is compromised when their images are scraped into AI training datasets such as LAION-5B. The children's personal details, along with links to their photographs, were included in the dataset, putting them at risk of exploitation and manipulation by malicious actors.

Origin of the Dataset

LAION-5B, the dataset in question, is built from Common Crawl, a repository of web-scraped data. AI startups have used it to train models, including image generators such as Stability AI's Stable Diffusion. With billions of image-caption pairs, the dataset is a valuable resource for AI research and development.

After Stanford Internet Observatory researchers found links to child sexual abuse material in LAION-5B, LAION, the organization behind the dataset, took steps to remove those references. Separately, the children's images documented by Human Rights Watch were drawn from sources such as mommy blogs and personal videos posted to YouTube. This raises questions about compliance with YouTube's terms of service, which prohibit unauthorized scraping.

Concerns and Risks

Children's images can be misused to generate explicit deepfakes, and their inclusion in datasets may expose sensitive information such as locations or medical data. The rise of deepfakes in schools, particularly for bullying, raises ethical and legal questions about AI technologies built on data collected without consent.

Call for Better Regulations

In light of these privacy violations and risks, there is a growing need for stricter regulation and oversight of AI training datasets. Human Rights Watch, researchers such as those at Stanford University, and child protection agencies must collaborate to safeguard children's privacy and prevent the unauthorized use of their personal images in AI development.

The exploitation of children’s privacy through unauthorized scraping for AI training datasets is a pressing issue that requires immediate attention and action. By addressing the ethical and legal implications of using personal data without consent, we can protect vulnerable individuals, especially children, from potential harm and exploitation in the digital age.
