In the rapidly evolving world of artificial intelligence, and of language models in particular, capability has long been equated with size. The large language models (LLMs) built by tech giants such as OpenAI and Google, with hundreds of billions of parameters, have set the benchmark for what these systems can do. Parameters are the adjustable weights a model tunes during training, and having more of them generally lets a model capture more complex patterns in massive datasets. Yet the very attributes that make these models so capable also carry significant drawbacks, notably their financial and environmental costs.

Training an LLM demands staggering computational resources: Google reportedly spent $191 million training its Gemini 1.0 Ultra model. An investment of that size raises a critical question: are we pursuing scale at the expense of accessibility and sustainability? Using these models is costly too, since a single query to one of them consumes roughly ten times the energy of a single Google search. Costs like these call into question the long-term viability of building ever-larger models.

Small Language Models: A Paradigm Shift

In light of these challenges, attention is shifting toward small language models (SLMs), which use a fraction of the parameters of their larger counterparts yet perform surprisingly well on specific tasks. IBM, Google, Microsoft, and OpenAI have all released such models, which typically top out at around 10 billion parameters. SLMs lack the broad versatility of LLMs, but they excel in narrowly defined roles such as chatbots that provide health information, summarizing conversations, or gathering data from connected devices.

Zico Kolter, a computer scientist at Carnegie Mellon University, notes that for many tasks an 8-billion-parameter model performs quite well. Models this small can run on everyday devices such as laptops and smartphones, widening access to capable AI tools. Rather than depending solely on massive data centers, SLMs let AI run on hardware that fits in the palm of your hand.
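A rough back-of-the-envelope calculation suggests why a model of this size can fit on consumer hardware. The minimal sketch below counts only the memory needed to store the weights, assuming either 16 or 4 bits per parameter (precisions chosen here for illustration, not taken from the article); real deployments also need memory for activations and other runtime state. The 300-billion-parameter model is a hypothetical stand-in for the "hundreds of billions" of parameters in today's largest LLMs.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory, in decimal gigabytes, needed just to store a model's weights.

    Assumption: ignores activations, caches, and framework overhead.
    """
    total_bytes = num_params * bits_per_param / 8  # 8 bits per byte
    return total_bytes / 1e9


if __name__ == "__main__":
    # 8B matches the model size Kolter mentions; 300B is a hypothetical large model.
    models = [("8B SLM", 8_000_000_000), ("hypothetical 300B LLM", 300_000_000_000)]
    for name, params in models:
        for bits in (16, 4):  # illustrative precisions: 16-bit floats, 4-bit quantization
            print(f"{name} at {bits}-bit: {weight_memory_gb(params, bits):.0f} GB")
```

Under these assumptions, the 8B model needs about 16 GB at 16-bit precision and about 4 GB at 4-bit precision, within reach of a well-equipped laptop, while the hypothetical 300B model needs 600 GB and 150 GB respectively.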

Taking a Lesson from the Giants: Knowledge Distillation

One key to the efficiency of SLMs is a technique known as knowledge distillation. The outputs of a large model are used to generate a high-quality training dataset for a smaller one, transferring what the larger model has learned much as a teacher passes lessons to a student. Because this curated data is cleaner than the chaotic text commonly scraped from the internet, SLMs can achieve impressive performance with far fewer resources.
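The article describes distillation as using a large model's outputs to build a training set. A closely related and widely used formulation trains the student to match the teacher's softened probability distribution over possible outputs. The minimal sketch below computes that loss for a single example in plain Python: both sets of logits are divided by a temperature, converted to probabilities, and compared with the KL divergence, which is scaled by the squared temperature. The logit values are made up for illustration; in practice this loss would be averaged over many examples and minimized with a deep learning framework.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's "soft labels"
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]       # hypothetical teacher logits for 3 outputs
    close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
    far_student = [0.2, 3.0, 1.0]    # prefers a different output
    print("close:", distillation_loss(teacher, close_student))
    print("far:  ", distillation_loss(teacher, far_student))
```

The student that already mirrors the teacher's preferences gets a much smaller loss, so training pushes the student toward the teacher's full output distribution rather than just its top answer.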

Data quality, in other words, can matter as much as model size. According to Kolter, “SLMs get so good with such small models and such little data because they use high-quality data instead of the messy stuff.” Careful curation can make up for much of what a smaller model gives up in scale.

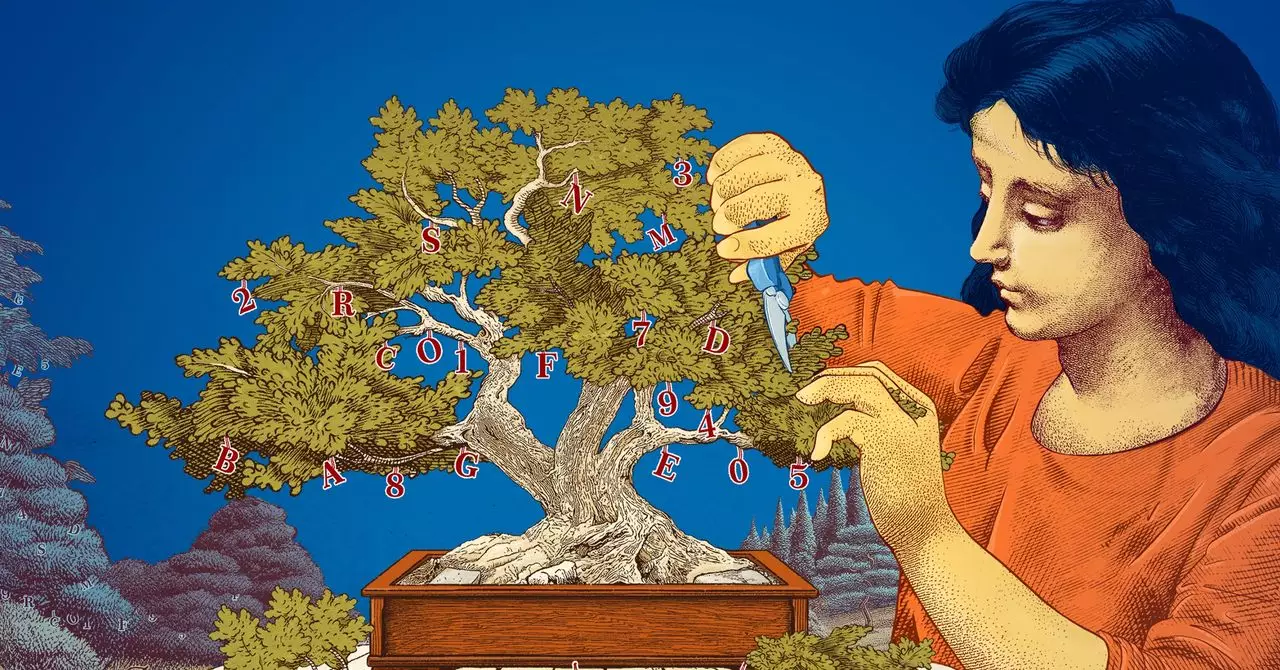

Pruning: Efficiently Taming the Neural Network

Another approach to creating SLMs is pruning, a technique inspired by the human brain, which gains efficiency by trimming synaptic connections as a person ages. Pruning systematically removes unnecessary connections from a trained neural network, potentially eliminating up to 90% of its parameters without sacrificing performance.
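A simple and common way to decide which connections are "unnecessary" is magnitude pruning: remove the weights closest to zero, on the assumption that they contribute least to the output. The minimal sketch below applies it to a flat list of weights. It is an illustrative stand-in rather than the only criterion researchers use, and in practice the pruned network is often fine-tuned afterward to recover accuracy.

```python
def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute values."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    n_prune = round(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    # Hypothetical weights from one layer of a trained network.
    w = [0.9, -0.02, 0.4, 0.01, -0.7, 0.05, -0.003, 0.3, 0.08, -0.6]
    pruned = magnitude_prune(w, 0.5)
    print(pruned)
    print("zeroed:", sum(1 for x in pruned if x == 0.0), "of", len(w))
```

With `fraction=0.5`, the five smallest-magnitude weights (-0.003, 0.01, -0.02, 0.05, 0.08) are set to zero and the five largest are kept unchanged.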

Today's pruning methods trace back to a 1989 paper by computer scientist Yann LeCun and colleagues, who called the approach “optimal brain damage” and showed that a network can shed much of its complexity while remaining effective. Pruning can also help researchers tailor an SLM to a specific task or environment, offering a lower-stakes path to innovation in AI.
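Optimal brain damage ranks parameters not by size but by an estimate of how much deleting each one would increase the training loss. Starting from a second-order Taylor expansion of the loss, and assuming the network sits at a minimum (so the gradient term vanishes) and that off-diagonal Hessian terms can be ignored, the saliency of parameter k is s_k = h_kk * w_k^2 / 2, where h_kk is the second derivative of the loss with respect to w_k. The minimal sketch below takes those curvature values as given; the numbers in the example are hypothetical, and computing h_kk for a real network requires an extra backpropagation-style pass that is omitted here.

```python
def obd_saliency(weights, hessian_diag):
    """Saliency s_k = h_kk * w_k**2 / 2: predicted loss increase from deleting w_k."""
    if len(weights) != len(hessian_diag):
        raise ValueError("need one curvature value per weight")
    return [0.5 * h * w * w for w, h in zip(weights, hessian_diag)]


def obd_prune(weights, hessian_diag, n_prune):
    """Zero out the n_prune weights whose removal is predicted to hurt the loss least."""
    s = obd_saliency(weights, hessian_diag)
    order = sorted(range(len(weights)), key=lambda i: s[i])  # lowest saliency first
    to_prune = set(order[:n_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.5, -0.1, 0.8, 0.2]       # hypothetical trained weights
    h = [0.01, 4.0, 2.0, 0.5]       # hypothetical diagonal Hessian entries
    print("saliencies:", obd_saliency(w, h))
    print("after pruning one weight:", obd_prune(w, h, 1))
```

Here the weight 0.5 is removed first because the loss is nearly flat in its direction (saliency 0.00125), whereas pure magnitude pruning would have removed -0.1, which sits on a steeply curved part of the loss (saliency 0.02).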

Leshem Choshen of the MIT-IBM Watson AI Lab points out that smaller models offer an inexpensive way to test new ideas. And because they have fewer parameters, their reasoning may be more transparent, making it easier for researchers to dissect and understand how they behave.

Efficiency Meets Application: The Future of Language Models

LLMs will continue to play a crucial role in general-purpose applications, from broad chatbots to drug discovery, but there remains an important niche for SLMs. Researchers who adopt these efficient models save time, money, and energy, and they avoid the cost and complexity of deploying massive systems for tasks that do not need them. This shift could mark a turning point in how AI is developed and applied.

SLMs point toward a future that prioritizes efficiency and practicality, enabling broader use across diverse sectors without a heavy computational burden. This progress could reshape how we perceive and use artificial intelligence, paving the way for solutions that are both powerful and sustainable. In an era of energy and financial constraints, small models may offer the sweet spot between capability and accessibility in the fast-moving field of AI.
